Hello everyone

I hope you're doing well.

Last week, I was running a test with Data Guard Broker, and at the end of the switchover I got the error below:

switchover was not successful - Timeout

These entries in my alert.log were probably the main cause of the error:

LAD:2 network reconnect abandoned

<error barrier> at 0x7ffcb2af9bf0 placed krsl.c@6774

ORA-03135: connection lost contact

*** 2023-10-04 09:57:23.145275 [krsh.c:6348]
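To look for the same symptoms in your own environment, a small shell sketch like the one below can help. The function name `scan_alert_log` is hypothetical, and the path in the example call is only a placeholder, since the alert log location depends on your diagnostic settings.

```shell
#!/bin/sh
# Hypothetical helper: print alert-log lines matching the symptoms quoted above.
# The file path is supplied by the caller; nothing here is Oracle-specific tooling.
scan_alert_log() {
    log_file="$1"
    # -n prefixes each hit with its line number; -E enables the alternation.
    grep -nE 'ORA-03135|network reconnect abandoned' "$log_file"
}

# Example call (placeholder path, adjust to your own alert log):
# scan_alert_log /path/to/alert_<sid>.log
```

Each match is printed with its line number, which makes it easier to find the surrounding timestamp in the log.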

When I checked my databases (primary and standby), the switchover had actually completed successfully.

select inst_id, database_role, open_mode, log_mode, flashback_on, force_logging from gv$database;

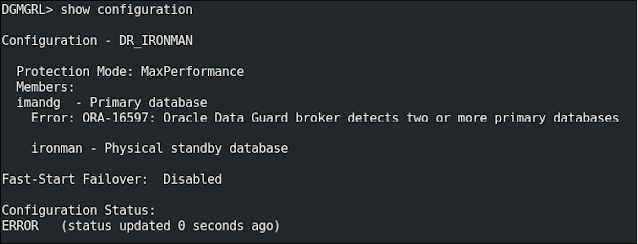

Everything was OK, but when I checked my DG Broker, the error below occurred.

show configuration

Okay, I admit that I thought: I'm a smart guy, a smart DBA, an Oracle ACE, so I'll just remove the configuration and that's it! 😄

Well done!!! Emotional damage!!! I failed, miserably!!! 😅😅😅

remove configuration;

Here is the solution that worked for me, but always remember:

- This step-by-step worked for me, but it may not work for you.

- It's a basic and limited environment. Real life will be different, for sure.

- This post is for study and testing purposes, and does not address performance or security best practices.

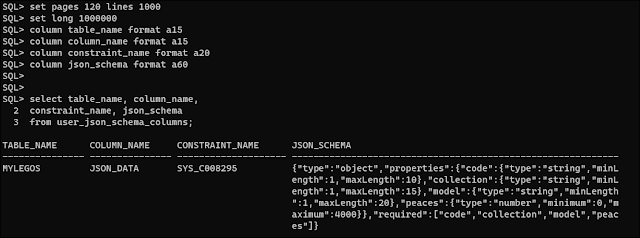

1) Check the location of the DG Broker config files

show parameter dg_broker_config_file

2) Set the dg_broker_start parameter to false in both environments

alter system set dg_broker_start=false scope=both sid='*';

3) Remove DG Broker config files

rm -f /u01/app/oracle/product/19.3.0.0/db_1/dbs/dr1IMANDG.dat

rm -f /u01/app/oracle/product/19.3.0.0/db_1/dbs/dr2IMANDG.dat
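If you prefer to keep a copy of the broker files rather than delete them outright, the removal step could be sketched as a rename. This is only an illustration: `backup_broker_files` is a hypothetical helper, and it assumes the broker is already stopped (step 2) and that the files follow the `dr1<name>.dat` / `dr2<name>.dat` pattern shown above.

```shell
#!/bin/sh
# Hypothetical helper: rename the broker config files instead of deleting them.
# $1 = directory holding the files, $2 = name part used in dr1<name>.dat / dr2<name>.dat
backup_broker_files() {
    dir="$1"
    name="$2"
    stamp=$(date +%Y%m%d%H%M%S)
    for f in "$dir/dr1${name}.dat" "$dir/dr2${name}.dat"; do
        # Skip files that do not exist, so the function is safe to re-run.
        if [ -f "$f" ]; then
            mv "$f" "$f.$stamp.bak"
            echo "moved $f"
        fi
    done
}

# Example call, using the directory and name from the commands above:
# backup_broker_files /u01/app/oracle/product/19.3.0.0/db_1/dbs IMANDG
```

The effect for the broker is the same as `rm -f`, because the files no longer exist under their original names, but the old configuration can still be inspected later.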

4) Check if the configuration was removed

show configuration

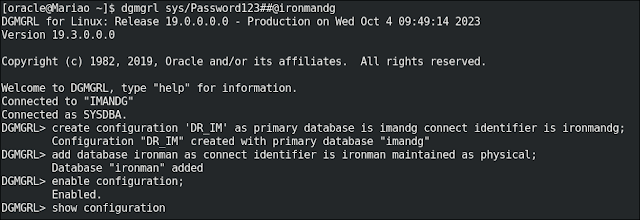

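5) Finally, recreate the DG Broker configuration (the broker has to be running for this, so set dg_broker_start back to true in both environments first if you haven't already)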

create configuration 'DR_IM' as primary database is imandg connect identifier is ironmandg;

add database ironman as connect identifier is ironman maintained as physical;

enable configuration;

show configuration verbose;

Configuration - DR_IM

Protection Mode: MaxPerformance

Members:

imandg - Primary database

ironman - Physical standby database

Properties:

FastStartFailoverThreshold = '30'

OperationTimeout = '30'

TraceLevel = 'USER'

FastStartFailoverLagLimit = '30'

CommunicationTimeout = '180'

ObserverReconnect = '0'

FastStartFailoverAutoReinstate = 'TRUE'

FastStartFailoverPmyShutdown = 'TRUE'

BystandersFollowRoleChange = 'ALL'

ObserverOverride = 'FALSE'

ExternalDestination1 = ''

ExternalDestination2 = ''

PrimaryLostWriteAction = 'CONTINUE'

ConfigurationWideServiceName = 'ironman_CFG'

Fast-Start Failover: Disabled

Configuration Status:

SUCCESS
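For scripted checks, the status at the end of this output can be extracted with a few lines of shell. The sketch below assumes the output layout shown above, with a "Configuration Status:" line followed by the status word; `config_status` is a hypothetical helper name.

```shell
#!/bin/sh
# Hypothetical helper: read "show configuration" output on stdin and print
# the first word that follows the "Configuration Status:" line.
config_status() {
    awk '
        found && NF { print $1; exit }                      # first non-blank line after the header
        /^[[:space:]]*Configuration Status:/ { found = 1 }  # remember that the header was seen
    '
}

# Example call (assumes OS authentication works for dgmgrl on this host):
# dgmgrl -silent / "show configuration" | config_status
```

A monitoring script could compare the printed word with SUCCESS and raise an alert otherwise.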

Yes, it's simple and works very well!

I hope this post helps you.

Regards

Mario